Generative 3D Models

Generative models have made impressive breakthroughs in text-to-image generation since 2022. Much of that success comes from diffusion models trained on billions of image-text pairs. However, no comparably large labeled dataset exists for 3D content, and building one is expensive.

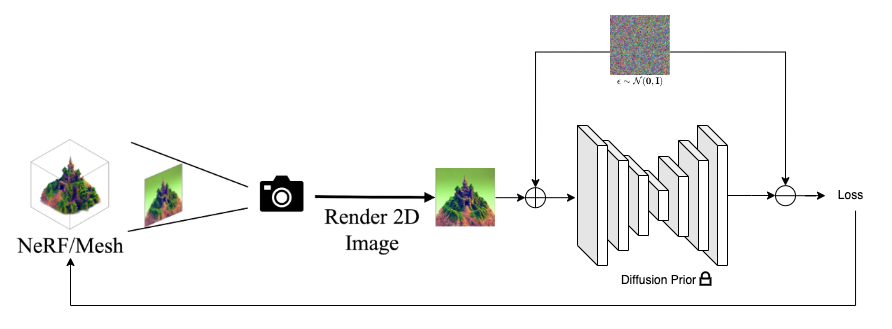

Following DreamFusion, the common approach to text-to-3D is to use a pretrained text-to-image diffusion model to generate 3D content from open-ended text prompts, circumventing the need for any 3D training data. The idea is to optimize a single 3D scene $\theta$, or a distribution of 3D scenes $\mu(\theta)$, so that the distribution of images rendered from all views matches, in terms of KL divergence, the distribution defined by the pretrained 2D diffusion model.
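The optimization described above can be illustrated with a toy sketch of a score-distillation style update. Nothing here comes from a real codebase, and every name is hypothetical: the "scene" is a single scalar `theta`, `render` is the identity, and `predict_noise` is a closed-form stand-in for a pretrained diffusion model whose data distribution is assumed to be a 1D Gaussian. The cosine noise schedule and the weighting `w(t) = sigma_t^2` are also assumed choices, not ones prescribed by this document.

```python
import math
import random

TARGET_MEAN = 2.0  # hypothetical mean of the toy "image" distribution
TARGET_STD = 0.5   # hypothetical standard deviation of that distribution


def render(theta):
    """Toy differentiable renderer g(theta): the identity, so dx/dtheta = 1."""
    return theta


def noise_schedule(t):
    """Assumed variance-preserving schedule with alpha_t^2 + sigma_t^2 = 1."""
    alpha = math.cos(0.5 * math.pi * t)
    sigma = math.sin(0.5 * math.pi * t)
    return alpha, sigma


def predict_noise(x_t, t):
    """Stand-in for a pretrained noise predictor.

    For Gaussian data the optimal prediction has a closed form:
    sigma * (x_t - alpha * mean) / (alpha^2 * std^2 + sigma^2).
    """
    alpha, sigma = noise_schedule(t)
    var_t = alpha ** 2 * TARGET_STD ** 2 + sigma ** 2
    return sigma * (x_t - alpha * TARGET_MEAN) / var_t


def sds_gradient(theta, rng):
    """One-sample estimate of w(t) * (predicted_noise - noise) * dx/dtheta."""
    t = rng.uniform(0.02, 0.98)       # random diffusion time
    eps = rng.gauss(0.0, 1.0)         # noise added to the rendered image
    alpha, sigma = noise_schedule(t)
    x = render(theta)
    x_t = alpha * x + sigma * eps     # noised rendering
    weight = sigma ** 2               # assumed weighting w(t)
    dx_dtheta = 1.0                   # derivative of the identity renderer
    return weight * (predict_noise(x_t, t) - eps) * dx_dtheta


def optimize(theta=-1.0, steps=5000, lr=0.02, seed=0):
    """Plain gradient descent on theta using the stochastic gradient above."""
    rng = random.Random(seed)
    for _ in range(steps):
        theta -= lr * sds_gradient(theta, rng)
    return theta


if __name__ == "__main__":
    print(f"optimized theta: {optimize():.3f} (toy prior mean: {TARGET_MEAN})")
```

In this toy setting the update pulls `theta` toward the mean of the assumed Gaussian prior. No 3D data is needed: only the noise predictor and the derivative of the renderer enter the update. In a real pipeline the renderer would be a differentiable 3D representation such as a NeRF, and the noise predictor would be a text-conditioned diffusion network.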

While most work in this area uses the Score Distillation Sampling (SDS) loss proposed by DreamFusion, ProlificDreamer more recently introduced the Variational Score Distillation (VSD) loss. For that reason, I label the loss in the diagram as a generic Loss rather than $\text{Loss}_{\text{SDS}}$.
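For reference, the two gradients can be written side by side. This is a sketch in notation borrowed from the DreamFusion and ProlificDreamer papers, not notation defined in this document: $g(\theta, c)$ is a differentiable renderer with camera $c$, $y$ is the text prompt, $x_t = \alpha_t\, g(\theta, c) + \sigma_t \epsilon$ is the noised rendering, $w(t)$ is a time-dependent weight, $\epsilon_{\text{pretrain}}$ is the frozen pretrained noise predictor, and $\epsilon_\phi$ is the additional noise predictor that VSD fine-tunes on renderings of the current scenes.

$$
\nabla_\theta \mathcal{L}_{\text{SDS}}(\theta) = \mathbb{E}_{t,\epsilon,c}\left[ w(t)\,\big(\epsilon_{\text{pretrain}}(x_t, t, y) - \epsilon\big)\,\frac{\partial g(\theta, c)}{\partial \theta} \right]
$$

$$
\nabla_\theta \mathcal{L}_{\text{VSD}}(\theta) = \mathbb{E}_{t,\epsilon,c}\left[ w(t)\,\big(\epsilon_{\text{pretrain}}(x_t, t, y) - \epsilon_\phi(x_t, t, c, y)\big)\,\frac{\partial g(\theta, c)}{\partial \theta} \right]
$$

The only difference is the term subtracted from the pretrained prediction: SDS subtracts the sampled noise $\epsilon$, while VSD subtracts the prediction of a second, learned network. Both fit the same pipeline, so a single generic loss label covers either.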

Papers Worth Reading

↓ Papers are listed in reverse chronological order (newest first).

Project Page | arxiv | Github

Project Page | arxiv | Github

Project Page | arxiv | Github

Project Page | arxiv | Github

Project Page | arxiv

arxiv | Github

Project Page | arxiv | stable-dreamfusion

Highlights

- Proposed the Score Distillation Sampling (SDS) loss